Furong Huang is an Associate Professor in the Department of Computer Science at the University of Maryland. Specializing in trustworthy machine learning, AI for sequential decision-making, and high-dimensional statistics, Dr. Huang focuses on applying theoretical principles to solve practical challenges in contemporary computing.

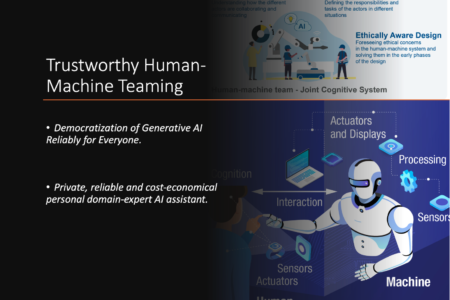

Her research centers on creating reliable and interpretable machine learning models that operate effectively in real-world settings. She has also made significant strides in sequential decision-making, aiming to develop algorithms that optimize performance and adhere to ethical and safety standards.

Student Highlights: Seven exceptional students from our group are on the job market this year, ready to bring their expertise to new frontiers! Six of them are pursuing industry roles, while one is on the academic job market seeking postdoctoral and faculty positions.

These talented researchers work in cutting-edge areas including AI Security, AI Agents, Alignment, Ethics, Fairness, and Responsible AI. They have deep experience with Generative AI, large language models (LLMs), vision-language models (VLMs), and vision-language-action models (VLAs), and have driven innovations in Weak-to-Strong Generalization and AI Safety.

Don’t miss this opportunity to connect with these future leaders in AI. Check out their profiles here!

Academic Positions

- 2024-present: Tenured Associate Professor, Department of Computer Science, University of Maryland
- 2017-2024: Tenure-Track Assistant Professor, Department of Computer Science, University of Maryland
- 2016-2017: Postdoctoral Researcher, Microsoft Research NYC (Mentors: John Langford, Robert Schapire)
- 2010-2016: Doctoral Researcher, University of California, Irvine (Advisor: Anima Anandkumar)

Selected Awards

MIT TR35

MIT Technology Review Innovators Under 35 (Asia Pacific), 2022.

Visionaries

She makes AI more trustworthy by developing models that can perform tasks safely and efficiently in unseen environments without human oversight.

AI Researcher of the Year

Finalist, AI in Research – AI Researcher of the Year, 2022 Women in AI Awards North America.

Special Jury Recognition – United States, 2022 Women in AI Awards North America.

National Science Foundation Awards

National Artificial Intelligence Research Resource (NAIRR) Pilot Awardee.

NSF Computer and Information Science and Engineering (CISE) Research Initiation Initiative (CRII).

NSF Division of Information and Intelligent Systems (IIS), Directorate for CISE, “FAI: Toward Fair Decision Making and Resource Allocation with Application to AI-Assisted Graduate Admission and Degree Completion.”

Industrial Faculty Research Awards

Microsoft Accelerate Foundation Models Research Award 2023.

JP Morgan Faculty Research Award 2022.

JP Morgan Faculty Research Award 2020.

JP Morgan Faculty Research Award 2019.

Adobe Faculty Research Award 2017.

Research Projects

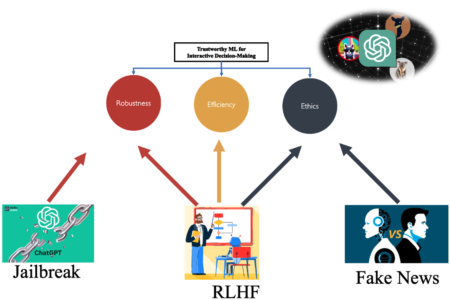

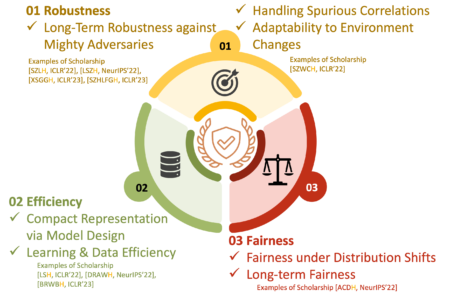

My research focuses on robustness, efficiency, and fairness in AI/ML models, which are vital to fostering an era of Trustworthy AI that society can rely on. It fortifies models against spurious features, adversarial perturbations, and distribution shifts; enhances model, data, and learning efficiency; and ensures long-term fairness under distribution shifts.

With academic and industrial collaborators, my research has been used for cataloguing brain cell types, learning human disease hierarchies, designing non-addictive painkillers, controlling power grids for resiliency, defending against adversarial entities in financial markets, and efficiently updating and fine-tuning industrial-scale models.

Contact Me

4124 The Brendan Iribe Center

Department of Computer Science

Center for Machine Learning

University of Maryland

College Park, MD 20740