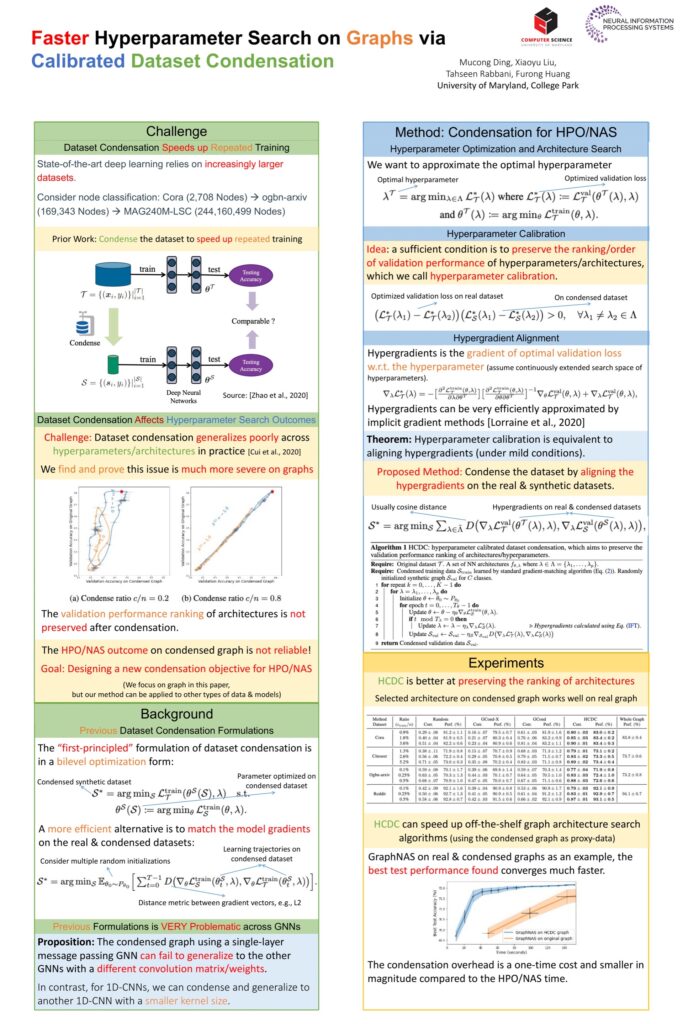

VIII. Dataset Condensation for Hyperparameter Search

Mucong Ding · Xiaoyu Liu · Tahseen Rabbani · Furong Huang, “Faster Hyperparameter Search for GNNs via Calibrated Dataset Condensation,” Deep Reinforcement Learning Workshop at Neural Information Processing Systems (NeurIPS), 2022. Paper Link, Workshop Link. Fri 2 Dec, 8:40 a.m. CST @ Theater A; Oral Talk at 11:30-12:00 or 3:30-4:00 CST.

Dataset condensation aims to reduce the computational cost of training multiple models on a large dataset by condensing the training set into a small synthetic set. State-of-the-art approaches match the model gradients computed on the real and synthetic data, and they have recently been applied to condense large-scale graphs for node classification. Dataset condensation could make hyperparameter optimization efficient, since that task requires training many models, but there is no theoretical guarantee on the generalizability of the condensed data. In practice, condensed data often generalizes poorly across hyperparameters and architectures, and we find and prove that this overfitting is much more severe on graphs. In this paper, we consider a different condensation objective geared specifically towards hyperparameter search: we generate the synthetic dataset so that the validation-performance rankings of models with different hyperparameters are comparable on the condensed and original datasets. We propose a novel hyperparameter-calibrated dataset condensation (HCDC) algorithm, which obtains the synthetic validation data by matching hyperparameter gradients computed via implicit differentiation and an efficient inverse-Hessian approximation. HCDC employs a supernet with differentiable hyperparameters, making it suitable for modeling GNNs with widely different convolution filters. Experiments demonstrate that the proposed framework effectively maintains the validation-performance rankings of GNNs and speeds up hyperparameter/architecture search on graphs.
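One way to make "comparable validation-performance rankings" concrete is a rank correlation between the scores that the same hyperparameter configurations obtain on the original and on the condensed data. The sketch below computes Spearman's rank correlation in plain Python. It is an illustration only, not the evaluation protocol of the paper: the function names `ranks` and `spearman` and the accuracy values are hypothetical and chosen for this example.

```python
def ranks(scores):
    """Return 1-based ranks (1 = highest score); tied scores share their average rank."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    result = [0.0] * len(scores)
    i = 0
    while i < len(order):
        # Extend j over the run of entries tied with position i.
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman rank correlation: the Pearson correlation of the two rank vectors."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two score lists of equal length >= 2")
    rx, ry = ranks(xs), ranks(ys)
    n = len(xs)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are tied")
    return cov / (var_x * var_y) ** 0.5


# Hypothetical validation accuracies of four hyperparameter configurations
# (made-up numbers for illustration, not results from the paper).
original_acc = [0.81, 0.78, 0.74, 0.69]   # trained on the original data
condensed_acc = [0.66, 0.68, 0.60, 0.55]  # trained on the condensed data

rho = spearman(original_acc, condensed_acc)
print(f"Spearman rank correlation: {rho:.2f}")
```

A value near 1 means a search run on the condensed data would order the configurations almost as the original data does, even when the absolute accuracies differ. In the made-up example only the top two configurations swap places.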