Code Link: https://github.com/huiminzeng/WeightedTraining_AAAI

Paper Link: https://arxiv.org/abs/2010.12989

Distribution-aware attacker

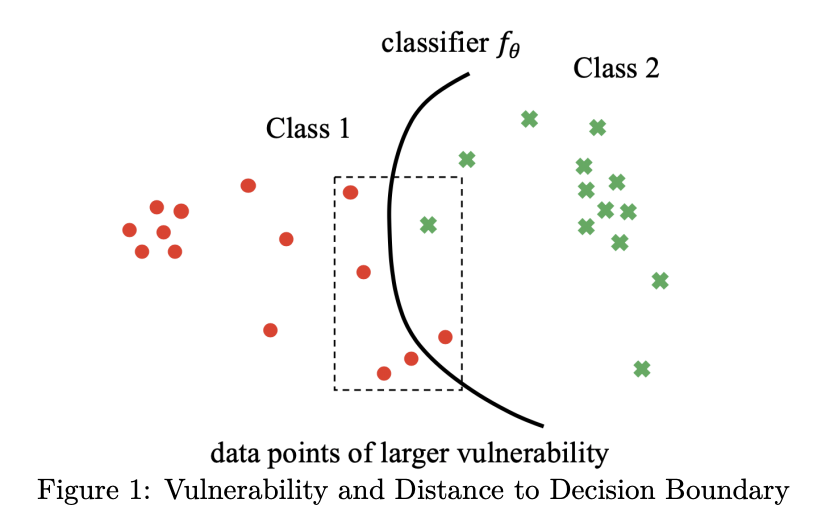

An attacker need not attack all input examples uniformly; they may instead concentrate on the more vulnerable points.

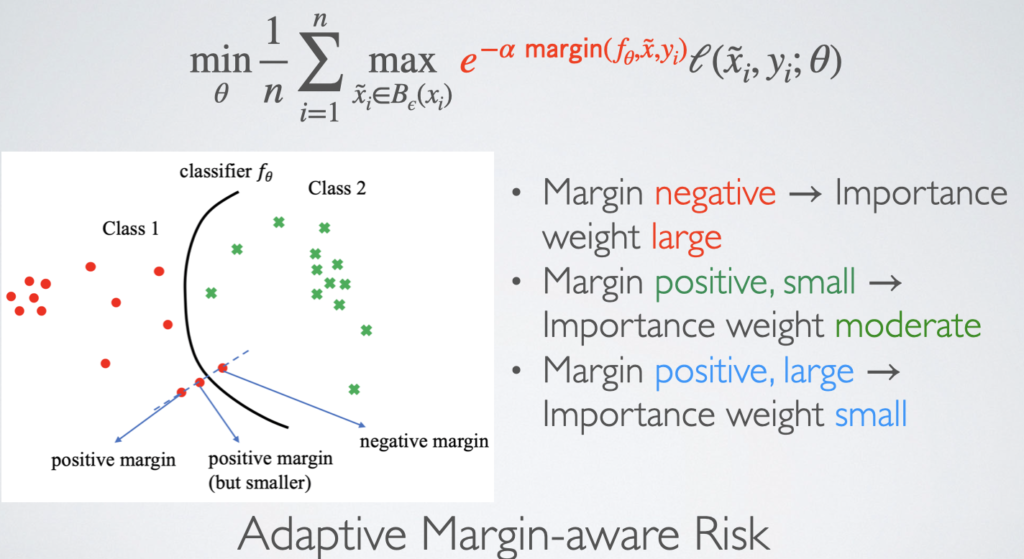

We propose a weighted minimax risk to improve the robustness of the model against adversarial perturbations that are distributed differently from the training examples.
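
To make the idea of a weighted minimax risk concrete, here is a minimal sketch rather than the method from the paper or the linked repository. It assumes a linear classifier with logistic loss and an L-infinity perturbation budget `eps`, for which the inner maximization has a closed form. All names are hypothetical, and the softmax-over-losses weighting is an illustrative assumption; the actual weighting scheme may differ.

```python
import math

def worst_case_logistic_loss(w, b, x, y, eps):
    """Logistic loss of a linear model under the worst L-infinity perturbation.

    For a linear score the inner maximization is closed-form:
    the margin shrinks by eps * ||w||_1.
    """
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    robust_margin = margin - eps * sum(abs(wi) for wi in w)
    return math.log1p(math.exp(-robust_margin))

def softmax(scores):
    """Numerically stable softmax, used here to turn losses into weights."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_minimax_risk(losses, weights):
    """Weighted sum of per-example worst-case losses; weights must sum to 1."""
    assert abs(sum(weights) - 1.0) < 1e-9
    return sum(wt * loss for wt, loss in zip(weights, losses))

# Toy data (hypothetical): the second point lies close to the decision boundary.
w, b, eps = [1.0, -1.0], 0.0, 0.1
data = [([2.0, 0.0], 1), ([0.2, 0.0], 1), ([0.0, 1.5], -1)]
losses = [worst_case_logistic_loss(w, b, x, y, eps) for x, y in data]

uniform = [1.0 / len(losses)] * len(losses)
vulnerable_first = softmax(losses)  # assumption: higher loss gets more weight

uniform_risk = weighted_minimax_risk(losses, uniform)
weighted_risk = weighted_minimax_risk(losses, vulnerable_first)
```

With uniform weights this reduces to the usual average adversarial loss. Shifting weight toward high-loss examples raises the risk estimate, which is the sense in which an attacker who focuses on vulnerable points is harder to defend against than a uniform one.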