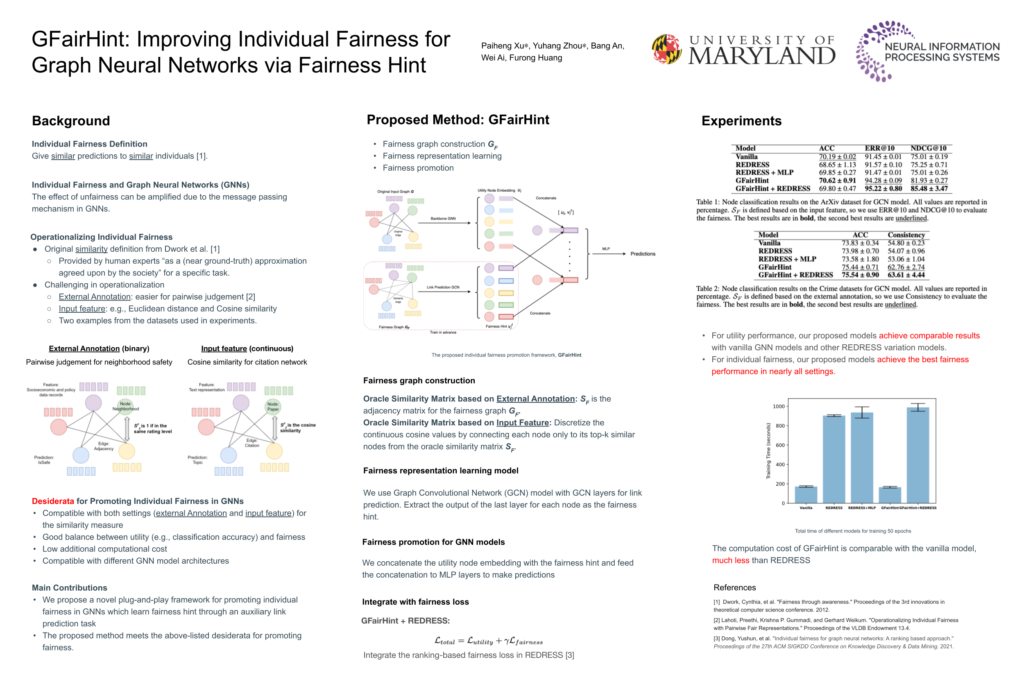

XII. Individual Fairness on Graphs

Paiheng Xu, Yuhang Zhou, Bang An, Wei Ai, Furong Huang, “GFairHint: Improving Individual Fairness for Graph Neural Networks via Fairness Hint”, Trustworthy and Socially Responsible Machine Learning Workshop at Neural Information Processing Systems (NeurIPS), 2022. Paper Link, Workshop Link. Fri 9 Dec, 8:45 a.m. CST.

Graph Neural Networks (GNNs) have proven versatile across diverse scenarios. With growing attention to societal fairness, many studies focus on algorithmic fairness in GNNs. Most aim to improve fairness at the group level, while only a few address individual fairness, which seeks to give similar predictions to similar individuals for a specific task. We expect an individual fairness promotion framework to be compatible with both discrete and continuous task-specific similarity measures and to balance utility (e.g., classification accuracy) against fairness. Such frameworks should also be computationally efficient and compatible with various GNN model designs. Because previous work fails to achieve all of these goals, we propose GFairHint, a novel method for promoting individual fairness in GNNs that learns a “fairness hint” through an auxiliary link prediction task. We empirically evaluate our method on five real-world graph datasets that cover both discrete and continuous settings for individual fairness similarity measures, with three popular backbone GNN models. The proposed method achieves the best fairness results in almost all combinations of datasets and backbone models while producing comparable utility results at much lower computational cost than the previous state-of-the-art (SoTA) model.
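
To make the notion of individual fairness concrete, the following is a minimal Python sketch of one way to quantify how differently a model treats nodes that are judged similar. It is an illustration only, not the evaluation metric or the method from the paper: the function names `prediction_gap` and `individual_unfairness`, the use of Euclidean distance, and the toy data are all assumptions made for this example, and the similarity relation is assumed to be discrete (a list of similar node pairs).

```python
import math


def prediction_gap(pred_a, pred_b):
    """Euclidean distance between two prediction vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(pred_a, pred_b)))


def individual_unfairness(predictions, similar_pairs):
    """Mean prediction gap over pairs of nodes judged similar.

    predictions: dict mapping node id -> list of class probabilities
    similar_pairs: iterable of (u, v) node-id pairs judged similar
    Lower is better; 0.0 means every similar pair gets identical predictions.
    """
    pairs = list(similar_pairs)
    if not pairs:
        return 0.0
    total = sum(prediction_gap(predictions[u], predictions[v]) for u, v in pairs)
    return total / len(pairs)


# Hypothetical toy data: nodes "a" and "b" receive the same prediction,
# while "a" and "c" are judged similar but receive opposite predictions.
predictions = {
    "a": [0.9, 0.1],
    "b": [0.9, 0.1],
    "c": [0.1, 0.9],
}
similar_pairs = [("a", "b"), ("a", "c")]
score = individual_unfairness(predictions, similar_pairs)
```

In this toy case the pair ("a", "b") contributes no gap and the pair ("a", "c") contributes a large one, so the score is positive. A continuous similarity measure would instead weight each pair by its similarity value.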