XIII. Adversarial Training in Multi-Agent RL

Xiangyu Liu, Souradip Chakraborty, Furong Huang, “Controllable Attack and Improved Adversarial Training in Multi-Agent Reinforcement Learning”, Trustworthy and Socially Responsible Machine Learning Workshop at Neural Information Processing Systems (NeurIPS), 2022. Paper Link, Workshop Link. Fri 9 Dec, 8:45 a.m. CST (Outstanding Paper Award)

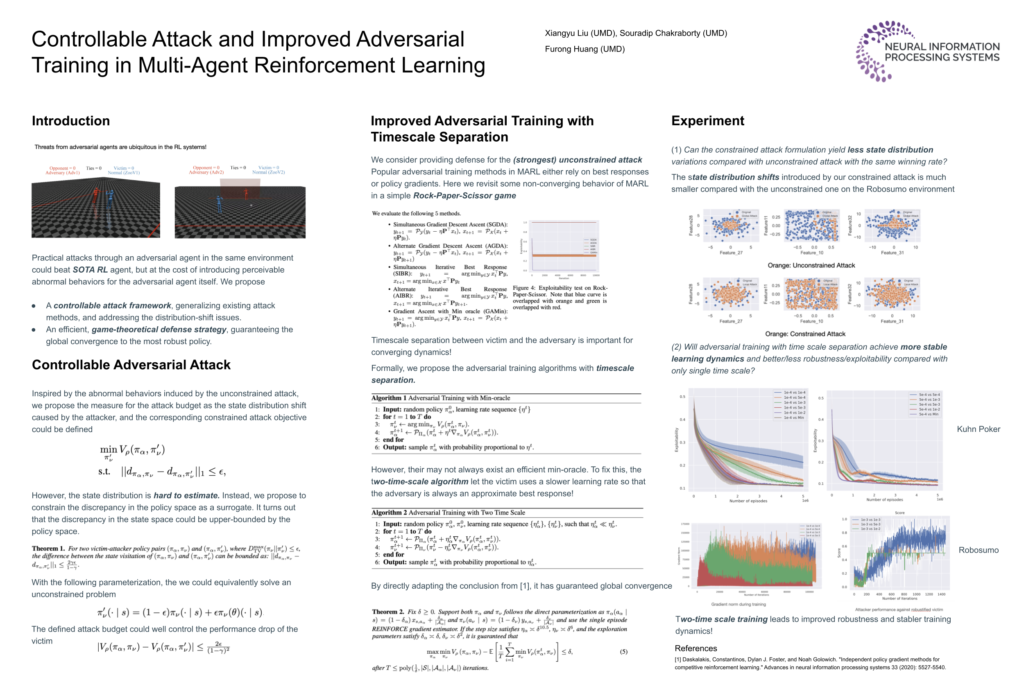

Deep reinforcement learning policies have been shown to be vulnerable to adversarial attacks due to the inherent fragility of neural networks. Current attack methods mainly focus on adversarial state or action perturbations, but such direct manipulations of a reinforcement learning system may not always be feasible or realizable in the real world. In this paper, we consider more practical adversarial attacks realized through the actions of an adversarial agent in the same environment. Prior work has shown that a victim agent is vulnerable to the behavior of an adversarial agent that aims to attack it, at the cost of introducing perceptibly abnormal behavior in the adversarial agent itself. To address this, we propose to constrain the state distribution shift caused by the adversarial policy and offer a more controllable attack scheme by building connections among policy space variations, state distribution shift, and the value function difference. To provide a provable defense, we revisit the cycling behavior of common adversarial training methods in Markov games, which is a well-known issue in general differential games, including Generative Adversarial Networks (GANs) and adversarial training in supervised learning. We propose to fix the non-converging behavior through a simple timescale separation mechanism. In sharp contrast to general differential games, where timescale separation may only converge to stationary points, two-timescale training methods in Markov games can converge to the Nash Equilibrium (NE). Using the Robosumo competition experiments, we demonstrate that the controllable attack is much more efficient, in the sense that it introduces much less state distribution shift while achieving the same winning rate as the unconstrained attack. Furthermore, in both Kuhn Poker and the Robosumo competition, we verify that timescale separation leads to stable learning dynamics and less exploitable victim policies.
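To make the idea of timescale separation concrete, the following is a minimal sketch and not the paper's implementation. It assumes a single-state zero-sum game (rock-paper-scissors) as a stand-in for a Markov game, softmax policies trained by simultaneous gradient steps, and an adversary that learns faster than the victim. The names `two_timescale_train`, `victim_exploitability`, `lr_slow`, and `lr_fast`, along with the learning-rate values, are hypothetical choices for illustration.

```python
import math

# Victim (row player) payoff in rock-paper-scissors; the adversary receives the negative.
# Assumption: a one-state zero-sum game stands in for a full Markov game.
RPS = [[0.0, -1.0, 1.0],
       [1.0, 0.0, -1.0],
       [-1.0, 1.0, 0.0]]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_grad(probs, action_values):
    """Gradient of sum_i probs[i] * action_values[i] with respect to the logits."""
    baseline = sum(p * v for p, v in zip(probs, action_values))
    return [p * (v - baseline) for p, v in zip(probs, action_values)]


def victim_exploitability(x, payoff, game_value=0.0):
    """Gap between the game value and the victim's payoff under a best-responding adversary."""
    rows, cols = len(payoff), len(payoff[0])
    worst = min(sum(x[i] * payoff[i][j] for i in range(rows)) for j in range(cols))
    return game_value - worst


def two_timescale_train(payoff, victim_logits, adversary_logits,
                        lr_slow=0.01, lr_fast=0.5, steps=5000):
    """Simultaneous gradient ascent (victim, slow) and descent (adversary, fast)."""
    theta, phi = list(victim_logits), list(adversary_logits)
    rows, cols = len(payoff), len(payoff[0])
    for _ in range(steps):
        x, y = softmax(theta), softmax(phi)
        # Victim payoff of each victim action against the current adversary policy.
        u = [sum(payoff[i][j] * y[j] for j in range(cols)) for i in range(rows)]
        # Victim payoff induced by each adversary action against the current victim policy.
        v = [sum(x[i] * payoff[i][j] for i in range(rows)) for j in range(cols)]
        g_theta, g_phi = softmax_grad(x, u), softmax_grad(y, v)
        theta = [t + lr_slow * g for t, g in zip(theta, g_theta)]  # slow timescale
        phi = [p - lr_fast * g for p, g in zip(phi, g_phi)]        # fast timescale
    return softmax(theta), softmax(phi)


if __name__ == "__main__":
    x, y = two_timescale_train(RPS, [1.0, 0.0, 0.0], [0.0, 0.0, 0.0])
    print("victim policy:", x)
    print("victim exploitability:", victim_exploitability(x, RPS))
```

Setting `lr_fast` equal to `lr_slow` recovers ordinary single-timescale simultaneous updates, which gives a simple baseline for comparing how the exploitability evolves under each schedule.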