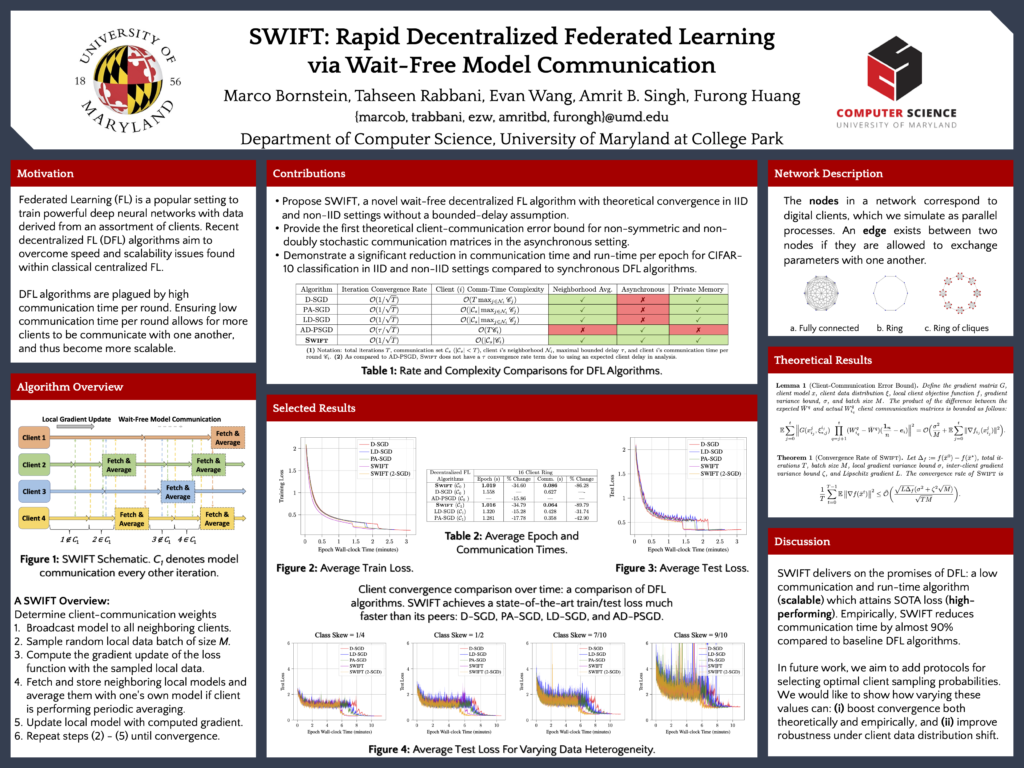

Workshop Papers

VII. Decentralized Federated Learning

Marco Bornstein · Tahseen Rabbani · Evan Wang · Amrit Bedi · Furong Huang, “SWIFT: Rapid Decentralized Federated Learning via Wait-Free Model Communication”, Workshop on Federated Learning: Recent Advances and New Challenges at Neural Information Processing Systems (NeurIPS), 2022. Paper Link, Workshop Link. Fri 2 Dec, 8:30 a.m. CST; Oral Talk at 11:00 a.m. – 11:07 a.m. CST, Room 298 – 299

The decentralized Federated Learning (FL) setting avoids the role of a potentially unreliable or untrustworthy central host by utilizing groups of clients to collaboratively train a model via localized training and model/gradient sharing. Most existing decentralized FL algorithms require synchronization of client models, where the speed of synchronization depends upon the slowest client. In this work, we propose SWIFT: a novel wait-free decentralized FL algorithm that allows clients to conduct training at their own speed. Theoretically, we prove that SWIFT matches the gold-standard iteration convergence rate O(1/√T) of parallel stochastic gradient descent for convex and non-convex smooth optimization (total iterations T). Furthermore, we provide theoretical results for IID and non-IID settings without any bounded-delay assumption for slow clients, which is required by other asynchronous decentralized FL algorithms. Although SWIFT achieves the same iteration convergence rate with respect to T as other state-of-the-art (SOTA) parallel stochastic algorithms, it converges faster with respect to run-time due to its wait-free structure. Our experimental results demonstrate that SWIFT’s run-time is reduced due to a large reduction in communication time per epoch, which falls by an order of magnitude compared to synchronous counterparts. Furthermore, SWIFT reaches the same loss levels for image classification, over IID and non-IID data settings, upwards of 50% faster than existing SOTA algorithms.
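To make the wait-free idea concrete, the following toy simulation may help. It is a minimal sketch under stated assumptions, not the authors' implementation: the function name `wait_free_sim`, the ring topology, the scalar quadratic objectives, the equal-weight averaging, and the `mailbox` of last-published models are all hypothetical choices made for illustration. Each client takes local gradient steps at its own pace and, when it communicates, averages with whatever its neighbors last published, however stale, instead of waiting for them.

```python
import random

def wait_free_sim(targets, speeds, steps=20000, lr=0.01, comm_every=2, seed=0):
    """Toy model: client i minimizes 0.5 * (x - targets[i])**2 on a ring."""
    rng = random.Random(seed)
    n = len(targets)
    models = [0.0] * n        # each client's private working model
    mailbox = [0.0] * n       # last model each client published
    local_steps = [0] * n
    for _ in range(steps):
        # Faster clients are activated more often; nobody waits for anyone.
        i = rng.choices(range(n), weights=speeds)[0]
        # Local gradient step on the client's own objective.
        models[i] -= lr * (models[i] - targets[i])
        local_steps[i] += 1
        if local_steps[i] % comm_every == 0:
            # Average with the neighbors' last published models (possibly
            # stale), then publish the result for others to read later.
            left = mailbox[(i - 1) % n]
            right = mailbox[(i + 1) % n]
            models[i] = (models[i] + left + right) / 3.0
            mailbox[i] = models[i]
    return models

if __name__ == "__main__":
    # Four equally fast clients whose local optima average to 2.5.
    print(wait_free_sim([1.0, 2.0, 3.0, 4.0], speeds=[1, 1, 1, 1]))
```

With equal speeds, the clients in this toy settle near the average of their local optima. With unequal `speeds`, this naive equal-weight averaging drifts toward the faster clients' optima, which is a limitation of the sketch itself and says nothing about how SWIFT weights communication.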